In this post I want to explore how to add a machine learning microservice to any existing mu.semte.ch project. I want to be able to upload an image and add the labels for that image to the SPARQL store.

TensorFlow

TensorFlow’s Inception model is great for image classification: it is a deep neural network trained to recognize objects. Its final layer can be removed and retrained easily (Google has published a tutorial on this). The microservice we will use wraps Inception and offers three routes:

- Add-Training-Example: through this route you tell the system that a given image file belongs to a given label

- Train: trains the model

- Classify: takes an image file and adds the classification to the triple store

For an existing mu.semte.ch project you will probably want to add this microservice together with a trained graph and then use only the classify route. While it is possible for a production system to keep learning, this may not be the best idea. Training is greedy: it will happily claim all available computational resources instead of leaving enough to keep the rest of the system running smoothly.

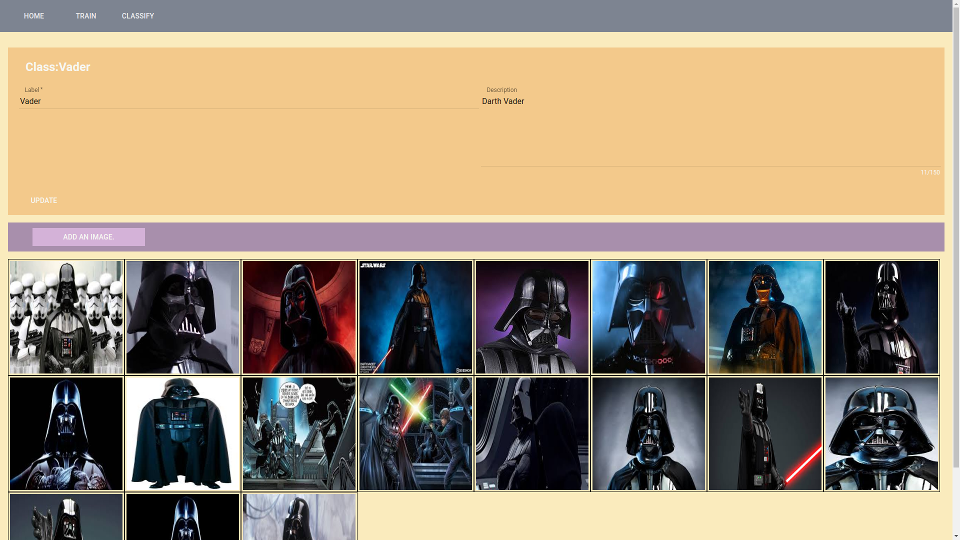

Mu-image-classifier demo

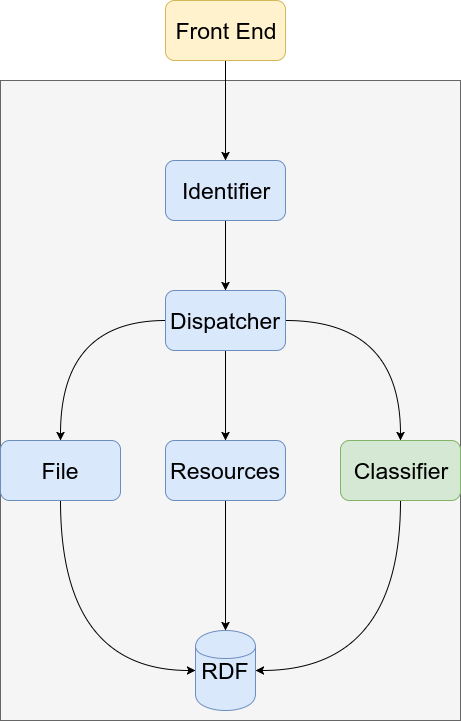

I have prepared a small example project where you can see and test the classifier microservice. A fresh clone has no trained graph, so the classify route will not work until you have trained one. The architecture of this demo app is shown in the image below:

Train the model

Once you have cloned the repository, cd into its directory and type:

    docker-compose up

Open a browser and go to localhost. Click on the training route. To train the model you first add classes (at least two) and then add images for each class. Use JPG images only; other formats are not supported. Once the training set is ready, click the “Train” button. Your computer will be busy for a while. If you need a more detailed tutorial on training, there is one on the main page of the project you have just cloned: check the ‘/’ route!
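Since the two constraints above (at least two classes, JPG only) are easy to trip over, it can help to sanity-check your images before uploading them. The following is only a sketch: it assumes you keep your images locally in one folder per class, which is a hypothetical layout and not something the demo app requires, and `check_training_set` is a made-up helper name. It looks at file extensions only, not at the actual image data.

```python
from pathlib import Path

# Extensions we treat as JPG; the check is by file name only.
JPG_SUFFIXES = {".jpg", ".jpeg"}


def check_training_set(root):
    """Return a list of problems found in a folder-per-class training set.

    Assumed (hypothetical) layout: root/<class name>/<image files>.
    An empty list means the set satisfies both constraints.
    """
    problems = []
    class_dirs = sorted(p for p in Path(root).iterdir() if p.is_dir())
    if len(class_dirs) < 2:
        problems.append(f"need at least 2 classes, found {len(class_dirs)}")
    for class_dir in class_dirs:
        files = sorted(p for p in class_dir.iterdir() if p.is_file())
        if not files:
            problems.append(f"class '{class_dir.name}' has no images")
        for image in files:
            if image.suffix.lower() not in JPG_SUFFIXES:
                problems.append(f"'{class_dir.name}/{image.name}' is not a JPG")
    return problems
```

Calling `check_training_set("my-images")` on such a folder returns a list of human-readable problems, or an empty list when everything looks fine.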

Add the image classifier to a generic mu.semte.ch project

Once you have a trained graph, it is really as simple as adding the image classifier microservice to your mu.semte.ch project. The one assumption is that the files in your triple store are described with the vocabulary documented for the mu-file-service.

Add this snippet to your docker-compose.yml:

    classifier:
      image: flowofcontrol/mu-tf-image-classifier
      links:
        - db:database
      environment:
        CLASSIFIER_TRESHHOLD: 0.7
      volumes:
        - ./data/classifier/tf_files:/tf_files
        - ./data/classifier/images:/images
        - ./data/files:/files
      ports:
        - "6006:6006"
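The `CLASSIFIER_TRESHHOLD` value in this snippet is not explained further in this post. One plausible reading, and it is only an assumption on my part, is that it acts as a confidence cut-off: labels that score below it are not written to the triple store. A minimal Python sketch of such a cut-off, with a hypothetical function name and purely illustrative scores:

```python
def labels_above_threshold(scores, threshold=0.7):
    """Return (label, score) pairs with score >= threshold, best first.

    `scores` maps a label to a probability between 0 and 1.
    The 0.7 default mirrors the value in the compose snippet; how the
    microservice really applies it is an assumption.
    """
    kept = [(label, score) for label, score in scores.items() if score >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Illustrative scores only, not real classifier output.
example_scores = {"darth vader": 0.91, "stormtrooper": 0.06, "yoda": 0.03}
print(labels_above_threshold(example_scores))
```

With a cut-off like this, a lower threshold tags more images but with less certainty, while a higher one leaves doubtful images untagged.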

As you can see, we mount three folders and expose a port. That port serves TensorBoard, TensorFlow’s visualization dashboard, which gives you access to all kinds of statistics about the image classifier. Add this to your dispatcher (if you want to be able to retrain, you also have to add the other routes):

    match "/classify/*path" do
      Proxy.forward conn, path, "http://classifier:5000/classify/"
    end
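To make the effect of this rule concrete, here is a small Python sketch of the mapping it performs, assuming (as I understand the dispatcher) that whatever follows `/classify/` in the incoming request is appended to the base URL. The function name `forward_target` and the `some-file-id` placeholder are hypothetical; what the classify route expects after the prefix is not covered here.

```python
def forward_target(request_path,
                   prefix="/classify/",
                   base="http://classifier:5000/classify/"):
    """Map an incoming request path to the backend URL it would be proxied to.

    Returns None when the path does not match the rule's prefix.
    """
    if not request_path.startswith(prefix):
        return None
    remainder = request_path[len(prefix):]  # the part captured by *path
    return base + remainder


print(forward_target("/classify/some-file-id"))
# prints: http://classifier:5000/classify/some-file-id
```

Routes for training would follow the same pattern with their own prefix and base URL.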

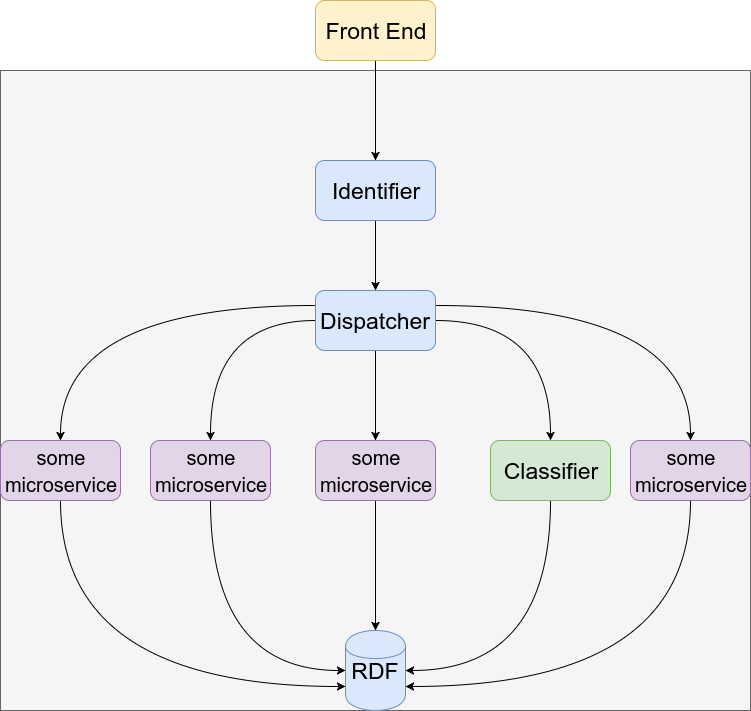

The architecture of your app might then look somewhat like:

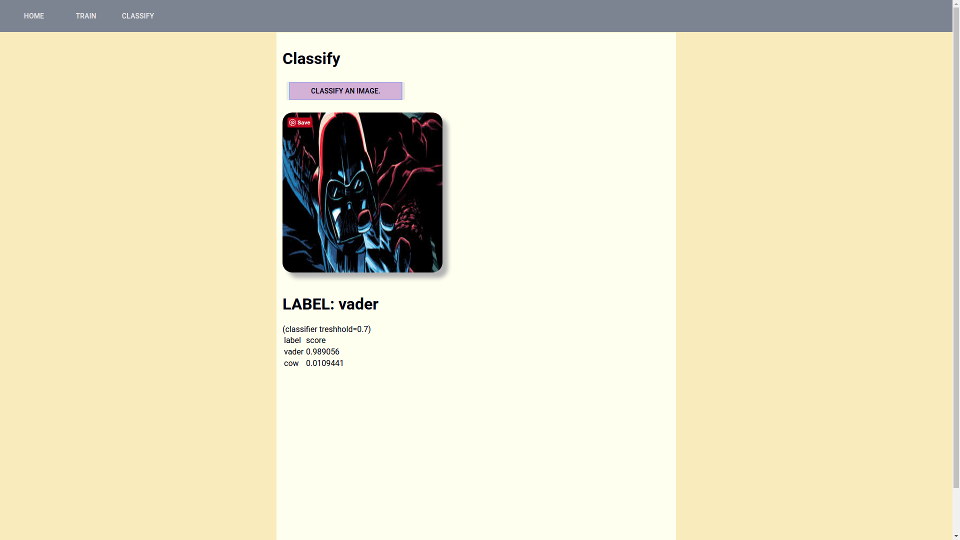

Classifying

If you have an image whose metadata is correctly stored in the triple store, you can call the classify route and the image will be tagged. The response of the classify route also tells you the probabilities for the other labels. Below is what I get when I use the demo app to classify a random “Darth Vader” search result from images.google.com:

That’s all folks!